Warning

The Vulcanexus distribution is not currently available, but it will be very soon. To follow this tutorial, a ROS 2 Galactic Docker image may be used instead, installing DDS Router whenever required. Please also take into account that the environment variable RMW_IMPLEMENTATION must be exported so as to use Fast DDS as middleware in ROS 2 (see Working with eProsima Fast DDS).
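The snippet below shows one way to set this variable in each shell or container before launching any ROS 2 node. It assumes the Fast DDS RMW package shipped with ROS 2 Galactic, `rmw_fastrtps_cpp`.

```bash
# Select Fast DDS as the ROS 2 middleware for this shell session.
# rmw_fastrtps_cpp is the Fast DDS RMW implementation distributed with ROS 2.
export RMW_IMPLEMENTATION=rmw_fastrtps_cpp
echo "Using RMW implementation: ${RMW_IMPLEMENTATION}"
```

When launching containers, the same value can be passed with Docker's `-e` option, e.g. `docker run -e RMW_IMPLEMENTATION=rmw_fastrtps_cpp ...`.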

1. Vulcanexus Cloud

Vulcanexus is an extended ROS 2 distribution provided by eProsima which includes additional tools for an improved user and development experience. Examples are Fast-DDS-Monitor, for monitoring the health of DDS networks, and micro-ROS, a framework aimed at optimizing and extending the ROS 2 toolkit to microcontroller-based devices.

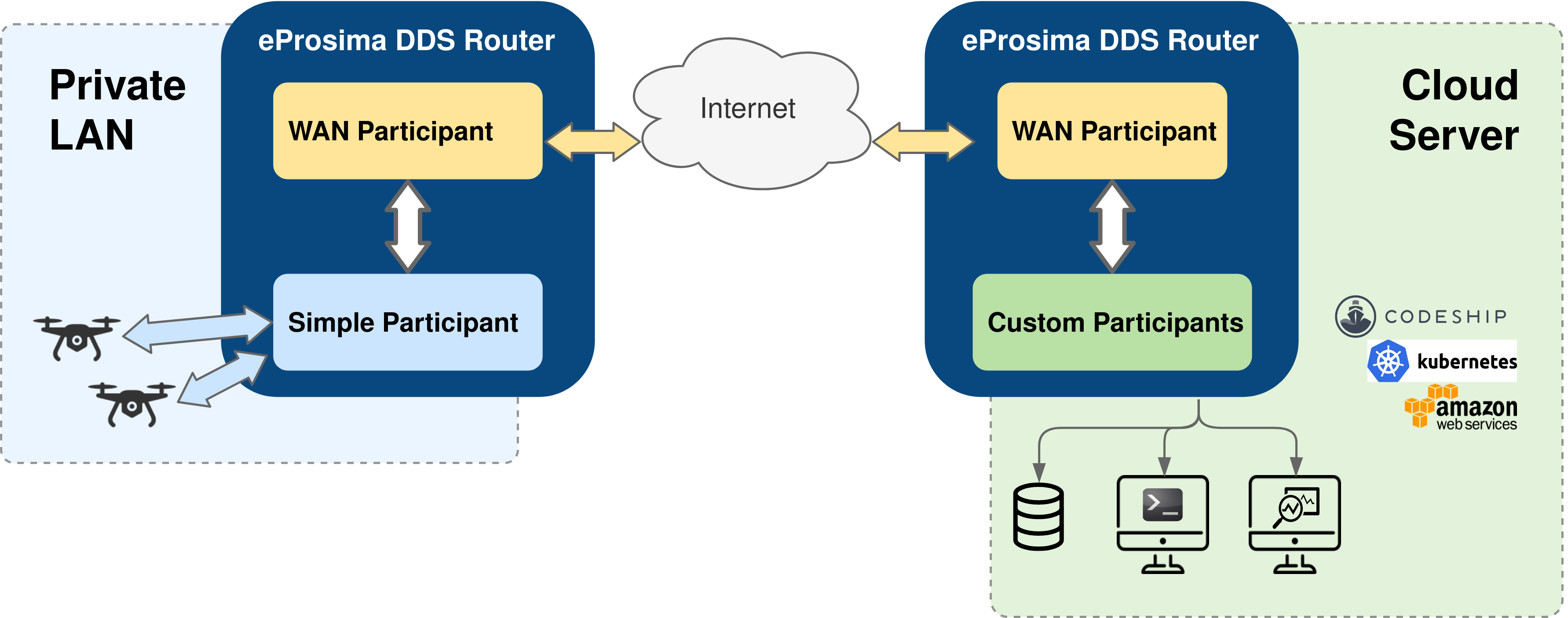

Apart from plain LAN-to-LAN communication, Cloud environments such as container-oriented platforms were also taken into account throughout the DDS Router design phase. In this walk-through example, we will set up both a Kubernetes (K8s) network and a local environment in order to establish communication between a pair of ROS nodes. One of them (talker) sends messages from a LAN, and the other one (listener) receives them in the Cloud. This will be accomplished by having a DDS Router instance at each side of the communication.

1.1. Local setup

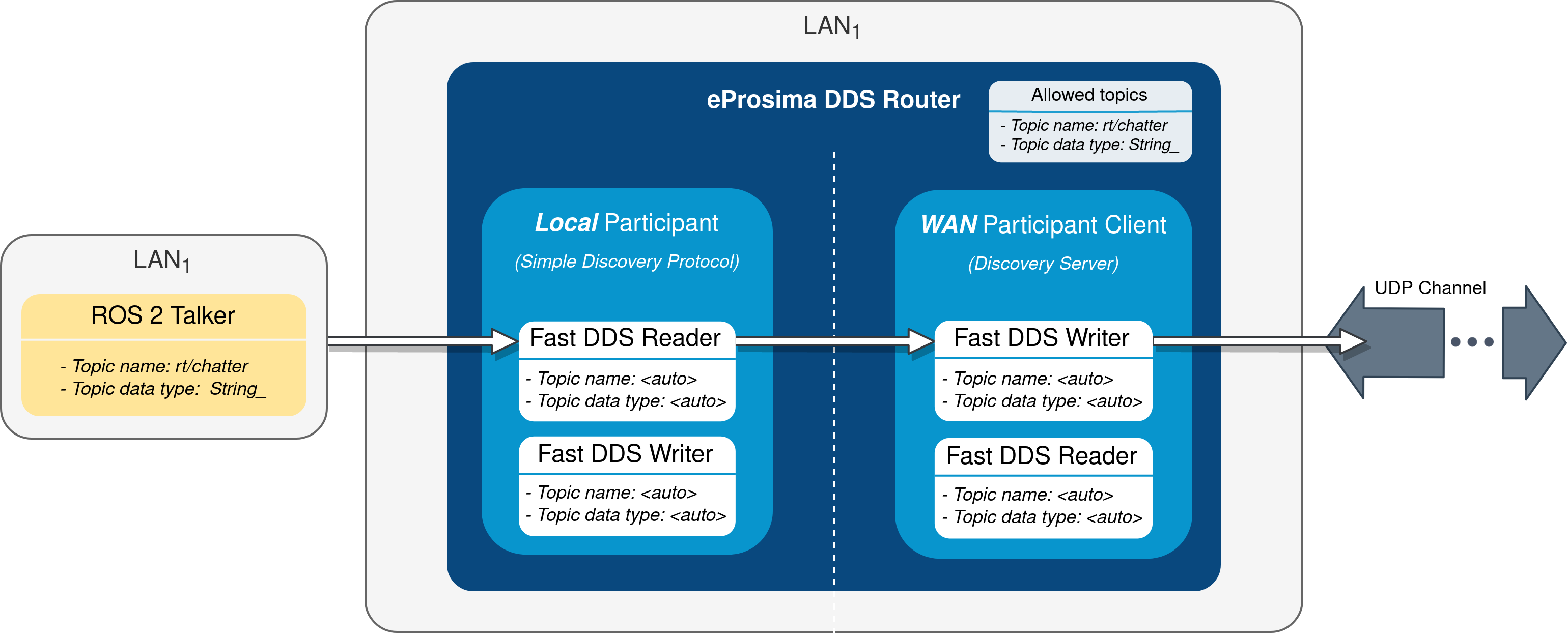

The local instance of DDS Router (local router) only requires two participants (see Discovery Server discovery mechanism):

- a Simple Participant;
- a WAN Participant that will play the client role in the discovery process of remote participants.

The talker and the local participant first discover each other through the Simple DDS discovery mechanism (multicast communication). From then on, the local participant receives the messages published by the ROS 2 talker node and forwards them to the WAN participant. The WAN participant then sends these messages, via WAN communication over UDP/IP, to another participant hosted on a K8s cluster.

The following is a representation of the above-described scenario:

1.1.1. Local router

The configuration file used by the local router will be the following:

```yaml
# local-ddsrouter.yaml

allowlist:
  [
    {name: "rt/chatter", type: "std_msgs::msg::dds_::String_"}
  ]

SimpleParticipant:
  type: local
  domain: 0

LocalWAN:
  type: wan
  id: 3
  listening-addresses:   # Needed for UDP communication
    [
      {
        ip: "3.3.3.3",   # LAN public IP
        port: 30003,
        transport: "udp"
      }
    ]
  connection-addresses:
    [
      {
        id: 2,
        addresses:
          [
            {
              ip: "2.2.2.2",   # Public IP exposed by the k8s cluster to reach the cloud DDS-Router
              port: 30002,
              transport: "udp"
            }
          ]
      }
    ]
```

Note that the simple participant will be receiving messages sent in DDS domain 0.

Also note that UDP was chosen as the transport protocol. Because of this, a listening address with the LAN public IP address must be specified for the local WAN participant, even though it behaves as client in the participant discovery process. Make sure that the given port is reachable from outside this local network by properly configuring port forwarding in your Internet router device.

The connection address points to the remote WAN participant deployed in the K8s cluster. For further details on how to configure WAN communication, please have a look at WAN Configuration.
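The addresses 3.3.3.3 and 2.2.2.2 are example values. Instead of editing the file by hand, it can be generated from shell variables. The following is a minimal sketch. The variable names `LAN_PUBLIC_IP` and `CLOUD_PUBLIC_IP` are hypothetical, and both default to the example addresses. The generated file is equivalent to the configuration above, written with single-line flow mappings.

```bash
# Hypothetical helper: write local-ddsrouter.yaml with your own addresses.
# Both variables fall back to the example IPs used in this tutorial.
LAN_PUBLIC_IP="${LAN_PUBLIC_IP:-3.3.3.3}"       # public IP of your LAN
CLOUD_PUBLIC_IP="${CLOUD_PUBLIC_IP:-2.2.2.2}"   # public IP exposed by the K8s cluster

# Unquoted EOF so that the two variables are expanded inside the heredoc.
cat > local-ddsrouter.yaml <<EOF
allowlist:
  [
    {name: "rt/chatter", type: "std_msgs::msg::dds_::String_"}
  ]

SimpleParticipant:
  type: local
  domain: 0

LocalWAN:
  type: wan
  id: 3
  listening-addresses:
    [
      {ip: "${LAN_PUBLIC_IP}", port: 30003, transport: "udp"}
    ]
  connection-addresses:
    [
      {
        id: 2,
        addresses:
          [
            {ip: "${CLOUD_PUBLIC_IP}", port: 30002, transport: "udp"}
          ]
      }
    ]
EOF

echo "Wrote local-ddsrouter.yaml (LAN ${LAN_PUBLIC_IP}, cloud ${CLOUD_PUBLIC_IP})"
```

Export both variables with your real addresses before running the snippet. Keep the ports in sync with the port forwarding rule and with the cloud router configuration.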

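To launch the local router from within a Vulcanexus Docker image, execute the following from the directory containing local-ddsrouter.yaml. Docker bind mounts require an absolute host path, hence the use of `$(pwd)`:

```bash
docker run -it --net=host -v "$(pwd)/local-ddsrouter.yaml:/tmp/local-ddsrouter.yaml" ubuntu-vulcanexus:galactic -r "ddsrouter -c /tmp/local-ddsrouter.yaml"
```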

1.1.2. Talker

The ROS 2 demo nodes are not installed in the Vulcanexus distribution by default. However, a new Docker image including them can easily be created with the following Dockerfile:

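```dockerfile
FROM ubuntu-vulcanexus:galactic

# Run RUN instructions with bash so that `source` is available
# (the default /bin/sh on Ubuntu is dash, which lacks it)
SHELL ["/bin/bash", "-c"]

# Install demo-nodes-cpp
RUN source /opt/ros/$ROS_DISTRO/setup.bash && \
    source /vulcanexus_ws/install/setup.bash && \
    apt update && \
    apt install -y ros-$ROS_DISTRO-demo-nodes-cpp

# Setup entrypoint
ENTRYPOINT ["/bin/bash", "/vulcanexus_entrypoint.sh"]
CMD ["bash"]
```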

Create the new image and start publishing messages by executing:

```bash
docker build -t vulcanexus-demo-nodes:galactic -f Dockerfile .
docker run -it --net=host vulcanexus-demo-nodes:galactic -r "ros2 run demo_nodes_cpp talker"
```

1.2. Kubernetes setup

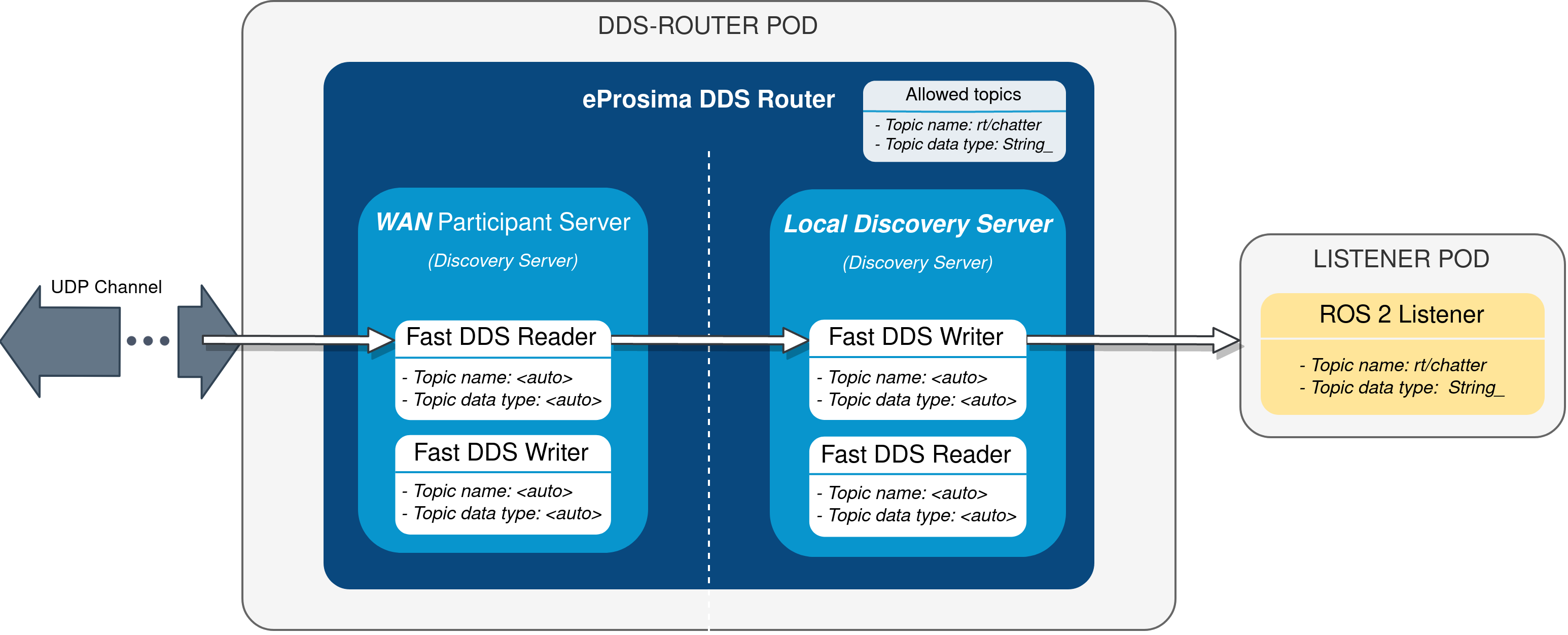

Two different deployments will be used for this example, each in a different K8s pod. The DDS Router cloud instance (cloud router) consists of two participants:

- a WAN participant that receives the messages coming from our LAN through the aforementioned UDP communication channel;
- a Local Discovery Server (local DS) that propagates them to a ROS 2 listener node hosted in a different K8s pod.

A Local Discovery Server is used instead of a Simple Participant to communicate with the listener because enabling multicast routing in cloud environments is difficult.

The described scheme is represented in the following figure:

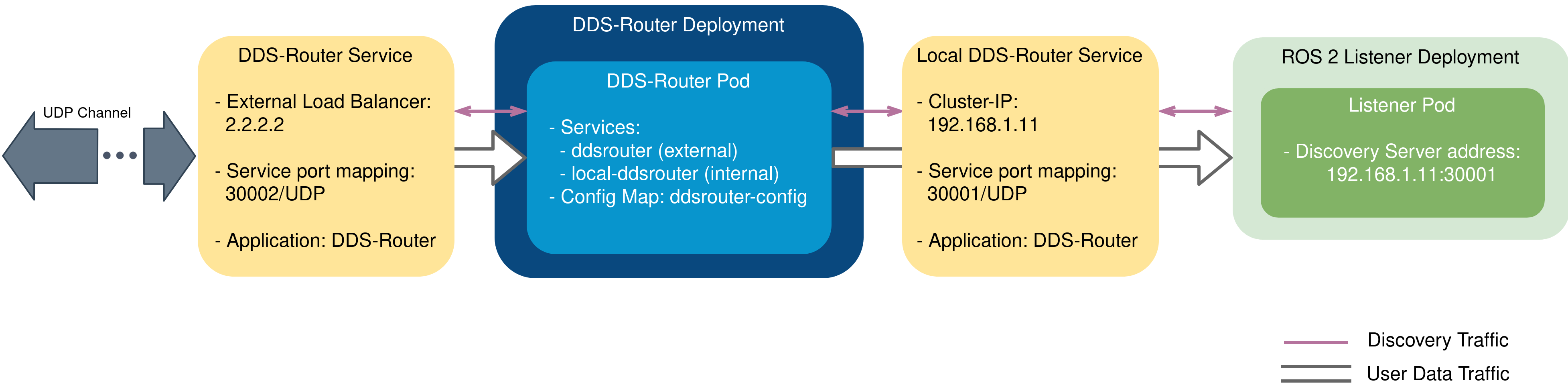

In addition to the two mentioned deployments, two K8s services are required in order to direct the data flow to each of the pods:

- a LoadBalancer service will forward messages reaching the cluster to the WAN participant of the cloud router;
- a ClusterIP service will be in charge of exchanging messages between the local DS and the listener pod.

The settings needed to launch these services in K8s are the following:

```yaml
kind: Service
apiVersion: v1
metadata:
  name: ddsrouter
  labels:
    app: ddsrouter
spec:
  ports:
    - name: udp-30002   # Port names must be lowercase DNS labels
      protocol: UDP
      port: 30002
      targetPort: 30002
  selector:
    app: ddsrouter
  type: LoadBalancer
```

```yaml
kind: Service
apiVersion: v1
metadata:
  name: local-ddsrouter
spec:
  ports:
    - name: udp-30001
      protocol: UDP
      port: 30001
      targetPort: 30001
  selector:
    app: ddsrouter
  clusterIP: 192.168.1.11   # Private IP only reachable within the k8s cluster to communicate with the ddsrouter application
  type: ClusterIP
```

Note

An Ingress needs to be configured for the LoadBalancer service to make it externally reachable. In this example, we consider the assigned public IP address to be 2.2.2.2.
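Once provisioning completes, the IP actually assigned to the LoadBalancer service can be read from the service status instead of being assumed. The snippet below is a minimal sketch that assumes `kubectl` is configured for the cluster. The helper `lb_external_ip` is hypothetical. It falls back to the example address when the value cannot be obtained.

```bash
# Hypothetical helper: print the external IP of a LoadBalancer service, or the
# given fallback when it cannot be queried (kubectl missing, no cluster access,
# or IP not assigned yet).
lb_external_ip() {
  local service="$1" fallback="$2" ip=""
  if command -v kubectl >/dev/null 2>&1; then
    # '|| true' keeps a failed query from aborting scripts run with 'set -e'.
    ip="$(kubectl get service "$service" --request-timeout=5s \
          -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null || true)"
  fi
  echo "${ip:-$fallback}"
}

CLOUD_PUBLIC_IP="$(lb_external_ip ddsrouter 2.2.2.2)"
echo "Cloud router public IP: ${CLOUD_PUBLIC_IP}"
```

Some cloud providers publish a hostname rather than an IP in this field. In that case `.hostname` would have to be queried instead of `.ip`. The resulting address is the one to use in the cloud router configuration and in the local router's connection address.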

The configuration file used for the cloud router will be provided by setting up a ConfigMap:

```yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: ddsrouter-config
data:
  ddsrouter.config.file: |-
    allowlist:
      [
        {name: "rt/chatter", type: "std_msgs::msg::dds_::String_"}
      ]

    LocalDiscoveryServer:
      type: local-discovery-server
      ros-discovery-server: true
      id: 1
      listening-addresses:
        [
          {
            ip: "192.168.1.11",   # Private IP only reachable within the k8s cluster to communicate with the ddsrouter application
            port: 30001,
            transport: "udp"
          }
        ]

    CloudWAN:
      type: wan
      id: 2
      listening-addresses:
        [
          {
            ip: "2.2.2.2",   # Public IP exposed by the k8s cluster to reach the cloud DDS-Router
            port: 30002,
            transport: "udp"
          }
        ]
```
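Embedding YAML inside the ConfigMap makes indentation mistakes easy. As an alternative not covered by the original setup, the cloud router configuration could be kept in a standalone file and rendered into the same ConfigMap with `kubectl create configmap --from-file`. The snippet below is a minimal sketch. The file name `cloud-ddsrouter.yaml` and the helper `render_configmap` are hypothetical. The key stays `ddsrouter.config.file` because the cloud router Deployment references it.

```bash
# Hypothetical helper: render the ddsrouter-config ConfigMap manifest from a
# standalone router configuration file, without creating it in the cluster.
render_configmap() {
  local config_file="$1"
  if ! command -v kubectl >/dev/null 2>&1; then
    echo "kubectl not found; skipping ConfigMap rendering" >&2
    return 1
  fi
  # --dry-run=client only prints the manifest; the key name must match the
  # 'items.key' used by the Deployment volume.
  kubectl create configmap ddsrouter-config \
    --from-file=ddsrouter.config.file="${config_file}" \
    --dry-run=client -o yaml
}
```

A possible usage is `render_configmap cloud-ddsrouter.yaml > ddsrouter-configmap.yaml && kubectl apply -f ddsrouter-configmap.yaml`.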

The following represents the overall configuration of our K8s cluster:

1.2.1. DDS-Router deployment

The cloud router is launched from within a Vulcanexus Docker image. It takes its configuration file from the previously created ConfigMap. The cloud router will be deployed with the following settings:

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: ddsrouter
  labels:
    app: ddsrouter
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ddsrouter
  template:
    metadata:
      labels:
        app: ddsrouter
    spec:
      volumes:
        - name: config
          configMap:
            name: ddsrouter-config
            items:
              - key: ddsrouter.config.file
                path: DDSROUTER_CONFIGURATION.yaml
      containers:
        - name: ubuntu-vulcanexus
          image: ubuntu-vulcanexus:galactic
          ports:
            - containerPort: 30001
              protocol: UDP
            - containerPort: 30002
              protocol: UDP
          volumeMounts:
            - name: config
              mountPath: /vulcanexus_ws/install/ddsrouter/share/resources
          args: ["-r", "ddsrouter -r 10 -c /vulcanexus_ws/install/ddsrouter/share/resources/DDSROUTER_CONFIGURATION.yaml"]
      restartPolicy: Always
```

1.2.2. Listener deployment

Again, since the demo nodes are not installed by default in Vulcanexus, we have to create a new Docker image that adds this functionality. The Dockerfile used for the listener differs slightly from the one used to launch the talker in our LAN, as here we need to specify the IP address and port of the local DS. This can be achieved with the following Dockerfile and entrypoint:

```dockerfile
FROM ubuntu-vulcanexus:galactic

# Run RUN instructions with bash so that `source` is available
SHELL ["/bin/bash", "-c"]

# Install demo-nodes-cpp
RUN source /opt/ros/$ROS_DISTRO/setup.bash && \
    source /vulcanexus_ws/install/setup.bash && \
    apt update && \
    apt install -y ros-$ROS_DISTRO-demo-nodes-cpp

COPY ./run.bash /
RUN chmod +x /run.bash

# Setup entrypoint
ENTRYPOINT ["/run.bash"]
```

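```bash
#!/bin/bash
# run.bash: launch a demo node as a client of the given Discovery Server.
# Usage: run.bash <listener|talker> <server_ip> <server_port>

if [[ $1 == "listener" ]]
then
    NODE="listener"
else
    NODE="talker"
fi

SERVER_IP=$2
SERVER_PORT=$3

# Setup environment
source "/opt/ros/$ROS_DISTRO/setup.bash"
source "/vulcanexus_ws/install/setup.bash"

echo "Starting ${NODE} as client of Discovery Server ${SERVER_IP}:${SERVER_PORT}"

# The leading ';' leaves the first entry empty, so the given address is taken as server ID 1
ROS_DISCOVERY_SERVER=";${SERVER_IP}:${SERVER_PORT}" ros2 run demo_nodes_cpp "${NODE}"
```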
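The leading semicolon in `ROS_DISCOVERY_SERVER` matters. As we understand the Fast DDS convention, each semicolon-separated entry gets the server ID matching its position in the list. So `;IP:PORT` designates server ID 1, which matches `id: 1` of the LocalDiscoveryServer in the cloud router configuration. A different ID would need a different number of leading semicolons. The hypothetical helper `discovery_server_env` builds that value for a given ID. It is a sketch, not part of Vulcanexus or Fast DDS.

```bash
# Hypothetical helper: place a single server address at position <id> of the
# semicolon-separated ROS_DISCOVERY_SERVER list, leaving earlier entries empty.
discovery_server_env() {
  local id="$1" address="$2" prefix="" i=0
  while [ "$i" -lt "$id" ]; do
    prefix="${prefix};"
    i=$((i + 1))
  done
  echo "${prefix}${address}"
}

# The cloud router's LocalDiscoveryServer uses id: 1, so one empty entry
# precedes its address, matching the value hard-coded in run.bash.
discovery_server_env 1 "192.168.1.11:30001"
```

With ID 1 this reproduces exactly the string used in run.bash, so the script and the router configuration stay consistent if either one changes.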

As before, to build the extended Docker image it suffices to run:

```bash
docker build -t vulcanexus-demo-nodes:galactic -f Dockerfile .
```

Now, the listener pod can be deployed by providing the following configuration:

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: ros2-galactic-listener
  labels:
    app: ros2-galactic-listener
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ros2-galactic-listener
  template:
    metadata:
      labels:
        app: ros2-galactic-listener
    spec:
      containers:
        - name: vulcanexus-demo-nodes
          image: vulcanexus-demo-nodes:galactic
          args:
            - listener
            - 192.168.1.11
            - '30001'
      restartPolicy: Always
```

Once all these components are up and running, communication should be established between the talker and listener nodes. Feel free to swap the locations of the ROS nodes by slightly modifying the provided configuration files, so that the talker is hosted in the K8s cluster while the listener runs in our LAN.
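One way to confirm this from the cluster side is to look for received messages in the listener logs. The demo listener reports each sample on a line starting with `I heard:`. The snippet below is a minimal sketch that assumes `kubectl` access to the cluster. The helper `listener_received` is hypothetical. It returns 0 when such a line is found among the latest log lines, 1 otherwise, and 2 when `kubectl` is unavailable.

```bash
# Hypothetical helper: check whether the listener deployment has logged at
# least one received message in its most recent output.
listener_received() {
  local deployment="${1:-ros2-galactic-listener}"
  command -v kubectl >/dev/null 2>&1 || return 2
  # The pipeline's status is grep's: 0 if a matching line was found.
  kubectl logs "deployment/${deployment}" --tail=50 --request-timeout=10s 2>/dev/null \
    | grep -q "I heard"
}
```

For example, `listener_received && echo "Messages are reaching the listener"` can be run after deploying everything.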